lately i’ve been going through crafting interpreters by bob nystrom using racket, and it’s got me thinking a lot about code generation in java vs lisps. the book uses java, and in places uses java itself to template out common patterns as source code. it’s about as awkward as i expected it to look. but i’m not writing to pick on java. mostly i want to appreciate the special niche that lisp continues to occupy.

in java, if you want to generate a set of classes, you have to write a class that outputs strings that will ultimately make up a valid java program. at runtime, you might have a method called generateObject that accepts some arguments and outputs the blueprint of a class. the output is strings, or even files, but it is not compiled as it is generated: a separate compilation pass has to be run on the generated source before any of it can execute.

this separation of code generation from compilation in meta-programming (meta because we’re using a program to produce more source code) is not specific to java. it’s common in most statically typed, ahead-of-time compiled languages.

dynamically typed, interpreted languages such as ruby and python can both generate code and execute that generated code at runtime.

for example, ruby can define methods dynamically at runtime via define_method, and they become available to the rest of the system. it can even evaluate arbitrary ruby code using methods like class_eval. these definitions are parsed and executed at runtime.
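a minimal sketch of both calls, to make that concrete. the Greeter class, the GREETINGS table and the shout method are names i made up for illustration; only define_method and class_eval come from ruby itself.

```ruby
# hypothetical class, for illustration only
class Greeter
  GREETINGS = { hello: "hello", goodbye: "goodbye" }.freeze

  # define_method: one method per table entry, created while the program runs
  GREETINGS.each do |method_name, word|
    define_method(method_name) { |name| "#{word}, #{name}" }
  end
end

# class_eval with a string: the new method arrives as plain source text
# and is parsed and evaluated at runtime
Greeter.class_eval <<~RUBY
  def shout(name)
    hello(name).upcase
  end
RUBY

greeter = Greeter.new
puts greeter.hello("racket")
puts greeter.shout("racket")
```

note that define_method takes a block (real ruby code), while this use of class_eval takes text that ruby has to parse first.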

however, once the generated code goes beyond what helpers like define_method can express, it has to be written as a string of source text, and the only way to manipulate it is as a plain string.
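here is roughly what that looks like in practice. this is only a sketch: getter_source and Pet are hypothetical names, and the point is just that "editing the program" means editing text.

```ruby
# hypothetical helper: returns the source text of a getter method
def getter_source(field)
  "def #{field}; @#{field}; end"
end

source = getter_source("name")

# the generated code has no structure we can reach into,
# so renaming the method is a text substitution
renamed = source.sub("def name", "def label")

# hypothetical class to receive the generated method
class Pet
  def initialize(name)
    @name = name
  end
end

# the string only becomes a method once ruby parses and evaluates it
Pet.class_eval(renamed)

puts renamed
puts Pet.new("Cat").label
```

nothing stops the substitution from producing invalid ruby. we only find out when class_eval tries to parse it.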

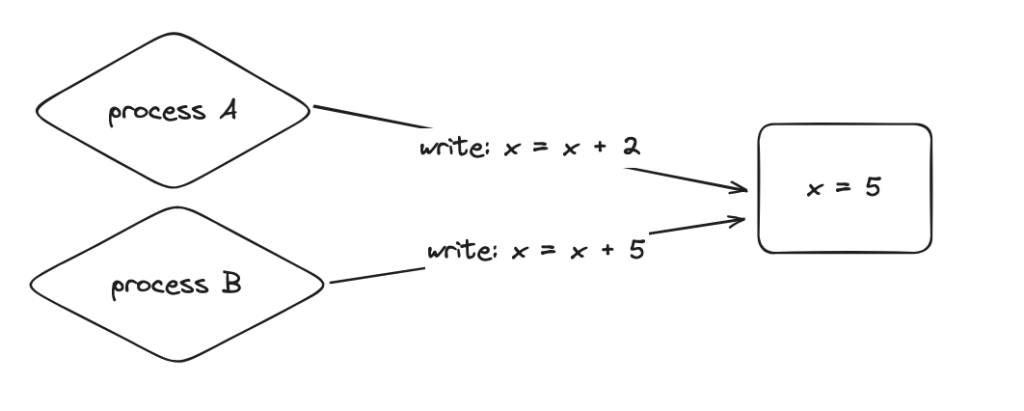

in both situations, whether dynamic or static, languages have

- a representation of a program as text that conforms to the language’s grammar, usually specified in a notation like EBNF

- a set of primitive data structures that can be manipulated at program runtime

and the program representation does not share a structure with those data structures. in other words, the language does not let the program itself be treated as a data structure, because the literal representation of the program (what a programmer sees) is not a data structure but just text.

take ruby as an example

    class Animal
      def initialize(name, sound)
        @name = name
        @sound = sound
      end

      def make_sound
        puts "#{@name} says #{@sound}!"
      end
    end

    # Creating an instance of the Animal class
    cat = Animal.new("Cat", "Meow")

    # Calling the make_sound method
    cat.make_sound

that’s a representation of a ruby program, and in no way does it resemble any of ruby’s data structures such as arrays or hashes.

as part of interpretation or compilation, program source does eventually undergo transformations (e.g. when class_eval is handed a string) that turn plain source text into data structures that can be manipulated. for example, the lexing and parsing phases of a compiler produce syntax tree data structures that can in fact be expressed with the same primitive structures supported by the language itself.

the keyword here is “eventually”. the program as it is represented before any compilation or parsing occurs is not a data structure and cannot be manipulated as such. this is as true for ruby as it is for java
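ruby happens to ship a parser in its standard library (ripper), which makes the "eventually" visible. a small sketch, assuming MRI ruby where ripper is available: the source starts as a string, and only after an explicit parse do we get nested arrays and symbols.

```ruby
require "ripper"

source = "1 + 2"

# before parsing: just text, with only string operations available
puts source.class

# after parsing: a tree built from ordinary arrays, symbols and strings
tree = Ripper.sexp(source)
puts tree.class
p tree
```

the tree is a regular ruby array, so it can be walked with each or flatten. but it is a separate artifact produced by a parser, not the form the program was written in.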

lisp

the best-known exception to this is lisp. the fancy academic word for this property, where a language’s external representation and its data structures coincide, is homoiconicity.

lisp is homoiconic because lisp programs manipulate s-expressions and are also written in s-expressions.

here’s a simple demonstration using racket (a lisp dialect descended from scheme) where we define an original program as a list and then transform the program itself before eval’ing it.

    #lang racket/base

    ; the program is a quoted list: the symbol + followed by three numbers
    (define original-program '(+ 1 2 3))

    ; keep the operator (car) and swap in new operands
    (define reshaped-program (list (car original-program) 4 5 6))

    ; eval needs a namespace in which + is bound
    (define ns (make-base-namespace))

    (eval reshaped-program ns) ; evaluates (+ 4 5 6), which is 15

since our original-program is just a list, it can be manipulated like any other list using functions like car. notice how there’s no distinction between the manipulated program and the surrounding program representation. it’s all just lists. that is code as data.

this power extends beyond arbitrary program eval and manipulation. lisp also lets you extend its syntax to support custom language features that are not built into the language. these are known as macros. again, since the language is made up of s-expressions, any new form or semantic can likewise be expressed in s-expressions, and can be expanded and eval’d like any other data structure, without first having to convert something more primitive like strings into an AST.

the whole language is an AST!

i’ve long wondered what the yin yang symbol of lisp represented, and it’s actually from structure and interpretation of computer programs (sicp), the MIT textbook. the yin yang represents eval and apply in the metacircular evaluator from the textbook. the metacircular evaluator is basically a lisp interpreter written in lisp: it is lisp evaluating itself through the use of both eval and apply.
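to get a feel for that eval/apply loop, here is a toy sketch in ruby. it is not the evaluator from the textbook and it is not metacircular (ruby is evaluating a lisp-like language, not itself). s-expressions are modeled as ruby arrays, and toy_eval, toy_apply, Closure and GLOBAL_ENV are all names i made up.

```ruby
# a user-defined procedure: parameter names, a body, and the environment it closed over
Closure = Struct.new(:params, :body, :env)

# primitive procedures are plain ruby lambdas
GLOBAL_ENV = {
  :+ => ->(*args) { args.sum },
  :* => ->(*args) { args.reduce(1, :*) }
}.freeze

# eval: work out what an expression means in an environment
def toy_eval(expr, env = GLOBAL_ENV)
  case expr
  when Numeric then expr
  when Symbol  then env.fetch(expr)
  when Array
    if expr.first == :lambda
      _, params, body = expr
      Closure.new(params, body, env)
    else
      procedure = toy_eval(expr.first, env)
      arguments = expr.drop(1).map { |operand| toy_eval(operand, env) }
      toy_apply(procedure, arguments) # eval hands off to apply
    end
  else
    raise ArgumentError, "unknown expression: #{expr.inspect}"
  end
end

# apply: call a procedure with already-evaluated arguments
def toy_apply(procedure, arguments)
  case procedure
  when Proc
    procedure.call(*arguments)
  when Closure
    local_env = procedure.env.merge(procedure.params.zip(arguments).to_h)
    toy_eval(procedure.body, local_env) # apply hands back to eval
  else
    raise ArgumentError, "not a procedure: #{procedure.inspect}"
  end
end

# roughly ((lambda (x) (* x x)) (+ 1 2)) written as arrays
program = [[:lambda, [:x], [:*, :x, :x]], [:+, 1, 2]]
puts toy_eval(program)
```

eval calls apply to run a procedure, and apply calls eval to run the procedure’s body. that back and forth is the circle in the symbol.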