“cache” is an extremely overloaded term in docker land nowadays because docker supports different types of caching. ill cover the three main cache terms – what they are and when they’re useful

build cache

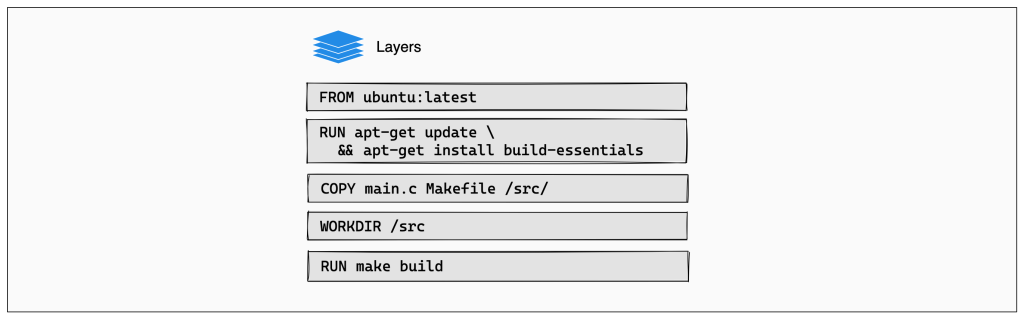

this is what most people think when you mention a docker cache. the build cache caches built image layers with the cache key being a docker instruction and/or the checksum of file contents. not all instructions create new image layers that contribute to final size of image – basically any of the commands that can write to the filesystem like COPY, ADD, RUN will create new layers. any FROM command that pulls in another image will also be a new layer.

you don’t need to do anything special for the builder to make use of build cache – this just comes for free. you can try it yourself. if you build a dockerfile once, run it again and you’ll see that it skips the steps if nothing changed. invalidation happens automatically as well. if the instruction changes, cache is invalidated. if a file part of a COPY command changes, cache is invalidated.

the build cache is probably most useful locally when you’re working on a dockerized application and using the same internal cache on your host machine.

external (build) cache

this is just another form of a build cache, except instead of relying on the builders internal cache you can use an external cache storage. once you start building images in a ci/cd environment that may be running your image builds on different instances, it may not be sufficient to rely on the default internal build cache to store an image. one run of your build will cache its images on its own machine but a subsequent run on a different executor machine will rebuild because it has its own internal docker cache. this can be wildly inefficient

the solution for this docker offers external cache backends. these can be fully remote ones like s3 where you specify a bucket and region or more local (but outside of buildkits internal cache) cache stores that store the cache in a specific directory on the host machine

many companies run dev workflows using github actions and use gh actions as their primary ci/cd pipeline, and docker allows you to point the cache to githubs cache.

cache mount

finally, we have cache mounts. these are are newer addition to build caches and they’re often confused with build caches because of a couple of reasons

- there’s cache in the name

- this cache is available only during image build – not too different from build caches

so what’s the difference? you’re not using the cache to store image layers, you’re using it in a more narrow sense to store artifacts produced by a RUN command. cache mounts are intended to cache results of RUN instructions so that any subsequent executions of that command during build time will go faster.

it’s used by specifying --mount-type=cache and specifying the location. at the time of writing, you cannot specify an external storage for this cache so this is entirely internal to the builder on the machine. anyway here’s an example

FROM node:latest

WORKDIR /app

COPY package.json ./

RUN --mount=type=cache,target=/root/.npm npm installin the above, the first ever build will copy package.json and install the NPM dependencies into the /root/.npm path of dockers internal cache. if package.json changes and both the COPY and RUN layers are invalidated, the NPM install will run a lot faster in the new build step because the dependencies are found in the cache.

as far as i know, at the moment supplying an external cache via --cache-from to the builder has no effect on the source of the cache mount. this makes it not very useful in situations like ci/cd where you’re dealing with ephemeral executors. you might be using an external build cache like s3, but cache mounts storage will still be internal to the builder instance on the machine. yeah, confusing.

once i actually learned the differences between these, searching docs became far easier. if i need to understand details on the build cache which caches image layers, ill search for build cache, image cache, or external cache. if i’m writing a new dockerfile that needs to cache dependencies for a particular build instruction to optimize local build times, i’ll look up cache mounts if i need an assist on the api